Before we embark on a discussion of a recent Nvidia (NVDA) AI investment (no, not the OpenAI one: https://nvidianews.nvidia.com/news/openai-and-nvidia-announce-strategic-partnership-to-deploy-10gw-of-nvidia-systems), we thought we’d start with a more fundamental question: Are Nvidia and the “AI” economy a Ponzi scheme?

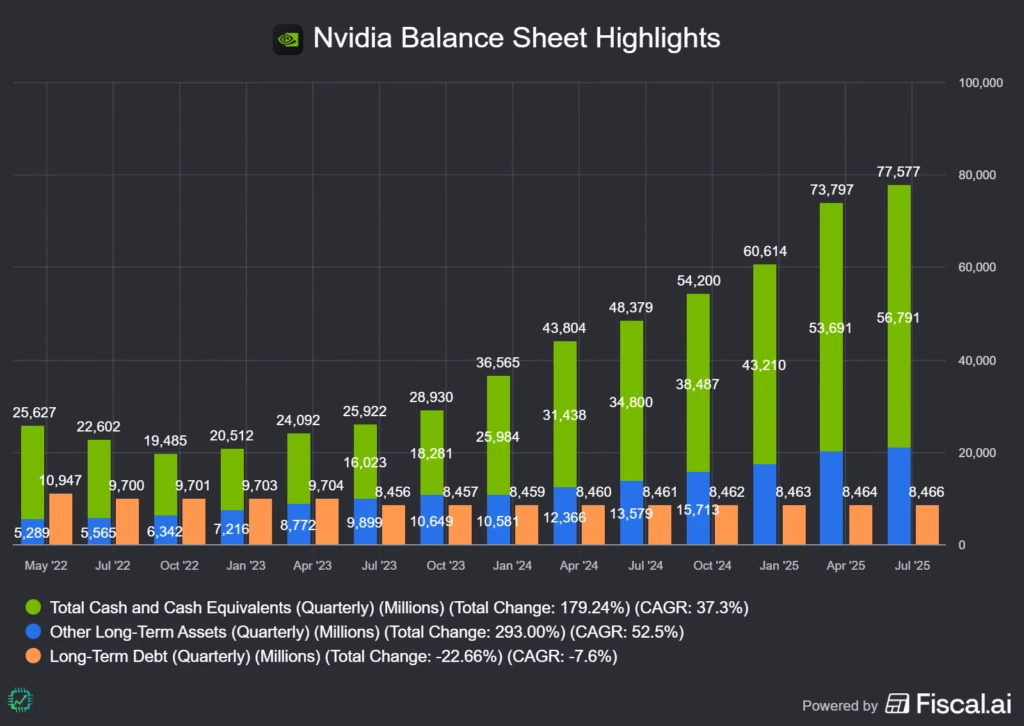

We address this first because, in the last week, several investors across different platforms have asked us (or told us) that Nvidia’s investments (shown in blue in the chart, vs. cash and short-term investments in green) and its partner network (including Oracle and OpenAI) are just moving money around, making the whole thing a circular Ponzi scheme.

To make financial visuals like the one above, check out Fiscal.ai! And get 15% off any paid plan using our special link: fiscal.ai/csi/

But seriously, let’s define a Ponzi scheme. (The story behind the name, Charles Ponzi, is an intriguing read on the state of the U.S. economy in the 1920s, BTW.) There are three primary components to a Ponzi investment fraud (with counterarguments as to why Nvidia very clearly does not fit the criteria):

- Promise of big returns, with little to no risk
  - Who ever said, us included, that NVDA was low risk? We’ve pointed out that, in the last 14 months alone, there have been two 30%+ drawdowns in the stock price (July-August 2024, March-May 2025). If you’re an emotional wreck, or you’re currently in need of your investment money, NVDA may not be the investment for you.

- Using new investor money to pay back old investors
  - Nvidia is not raising capital from new equity investors. It is using cash it earned, from its balance sheet, to make equity investments in an ecosystem of strategic partners and customers. If you’re an Nvidia investor and you want out, Nvidia isn’t paying you; the other investor you sell your shares to is paying you.

- No productive business is being done with any investments
  - There is no actual cash-flow positive business operation happening in a Ponzi scheme. Money flows through the fraud like a conduit, and the scheme fails when new investor money dries up. Nvidia is a productive operating business that generates profits, and its biggest customers (and most of its smaller ones) are too.
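For readers who want to check drawdown figures like the 30%+ declines cited above, a drawdown is the percentage fall from a running peak to a later trough. Here is a minimal Python sketch of that calculation; the price series is made up for illustration and is not actual NVDA data.

```python
def max_drawdown(prices: list[float]) -> float:
    """Largest peak-to-trough decline, as a fraction of the running peak."""
    if not prices:
        raise ValueError("need at least one price")
    peak = prices[0]
    worst = 0.0
    for p in prices:
        peak = max(peak, p)                    # highest price seen so far
        worst = max(worst, (peak - p) / peak)  # decline from that high
    return worst

# Hypothetical closing prices (NOT real NVDA data).
prices = [100.0, 120.0, 135.0, 110.0, 94.5, 105.0, 140.0]
print(f"max drawdown: {max_drawdown(prices):.1%}")  # max drawdown: 30.0%
```

Note that the stock ends this hypothetical series at a new high, yet a holder still sat through a 30% decline along the way.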

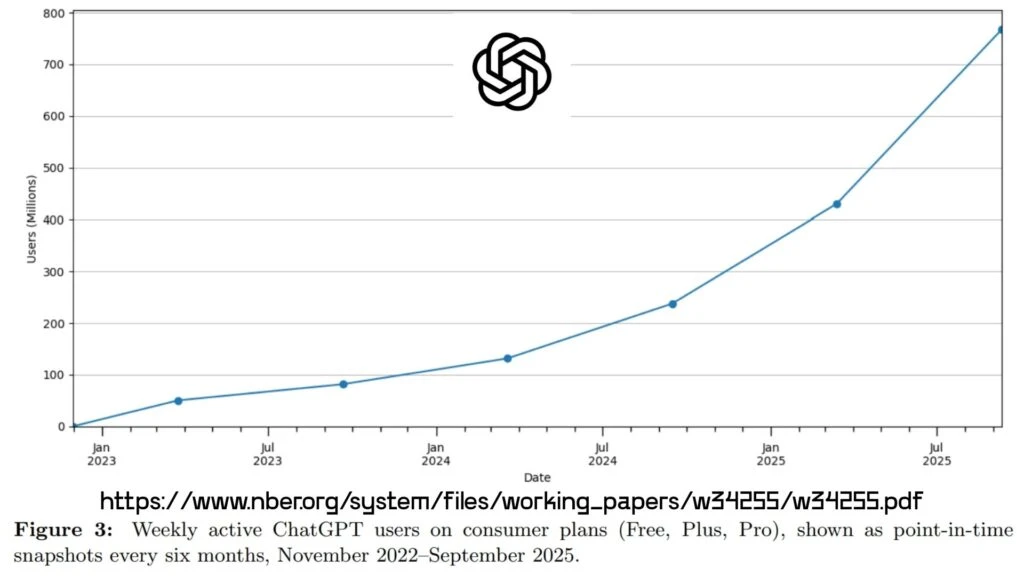

Is it a circular relationship that bears considerable risk for equity investors? Yes. But it’s not a Ponzi scheme (really, OpenAI has over 700 million users and is marching towards 1 billion on a similar track to TikTok; there’s productive business, with actual paying customers, being built: https://www.nber.org/system/files/working_papers/w34255/w34255.pdf).

In fact, new tech markets are frequently funded and promoted in the VC world in this “circular” way, where the investors are also the first product users, and the first product users the investors. It’s how potential new industries are created. It’s hard work, and often a few pioneering leaders eat their own cooking for some time as they flesh out utility for other potential customers elsewhere in the economy. Some of these basic principles are also embedded in this recent video we did about secular growth trends and the start and end of bull markets: https://youtu.be/OoQ3k63dg5k

It’s risky business because it’s cyclical, but also hard to engineer

Let’s expound on point number three above, “productive business” being developed with equity investments. We have a recent case in point that was overshadowed by all of the Oracle-OpenAI news.

According to a CNBC report last week, Nvidia has “acqui-hired” startup Enfabrica’s CEO and key employees, and licensed its technology, for $900 million. Nvidia was also an investor in Enfabrica in prior funding rounds. Neither Enfabrica nor Nvidia has made an official announcement, but neither has disputed the report. So, at the very least, we’ll assume Nvidia’s licensing of the tech is real.

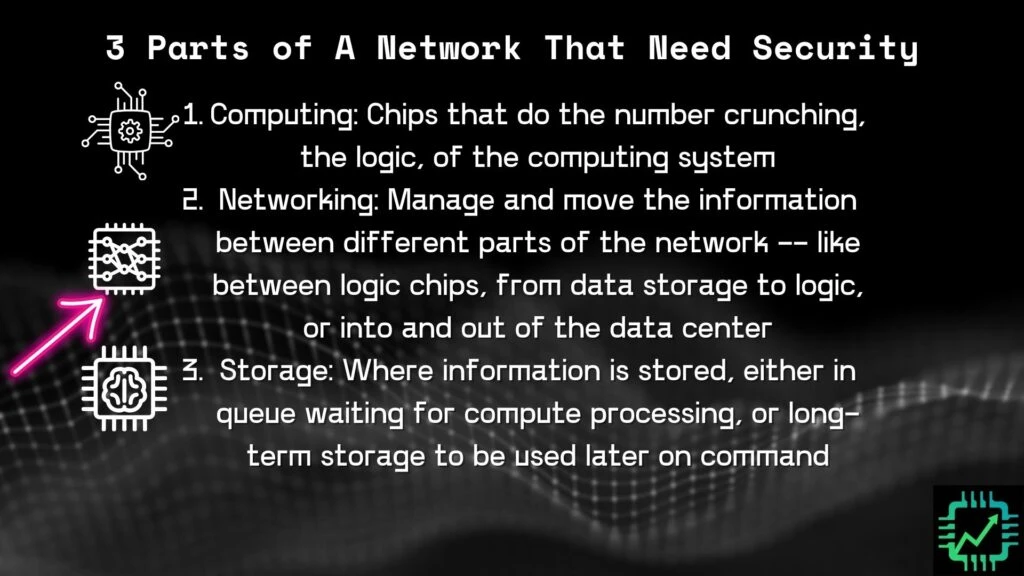

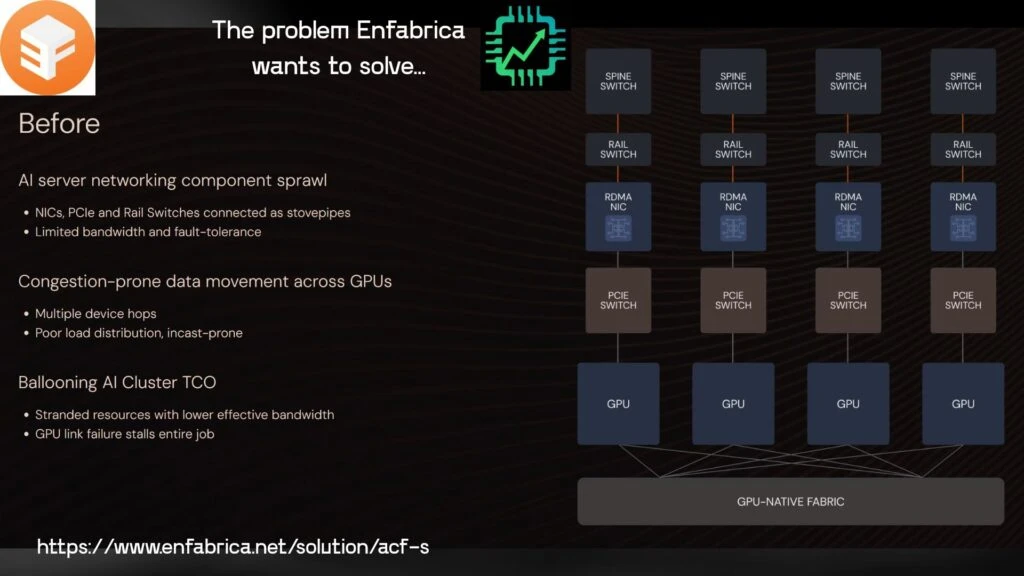

Why? Data centers (we’re gonna ditch the “AI data center” moniker) are scaling up, out, and across, networking many thousands of GPUs together into essentially what Nvidia calls “one big chip” that can handle tremendously large computing workloads. This combines all three main components of any computing system: Logic (GPUs and CPUs), storage (memory chips), and networking (connecting it all together).

Nvidia’s challenge is to keep engineering higher-performance and increasingly energy-efficient (on a per-compute basis) systems that its data center customers need. As a developer of semiconductors and full industrial-scale systems, Nvidia operates in a cyclical industry (currently in a strong growth cycle). That’s the key risk for Nvidia investors. But this engineering work is also increasingly difficult to solve for.

Why Enfabrica, and why now?

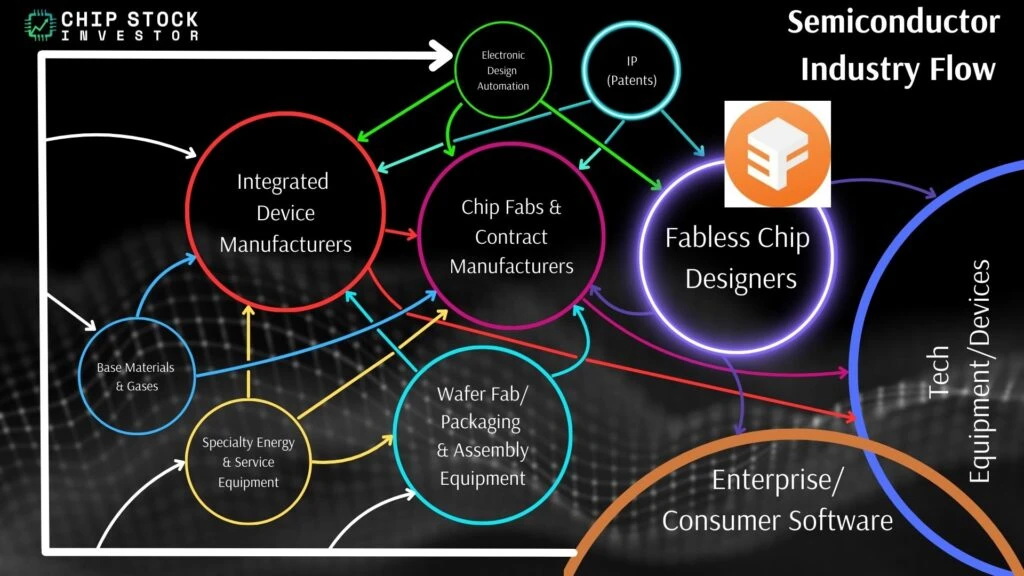

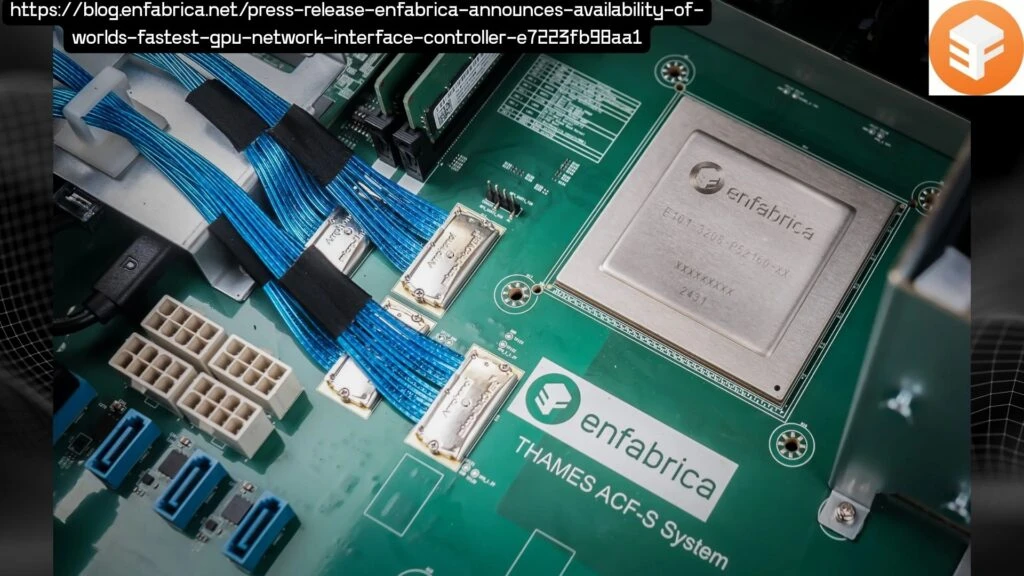

Like Nvidia itself, Enfabrica is a fabless designer of IP, semiconductors, and data center systems.

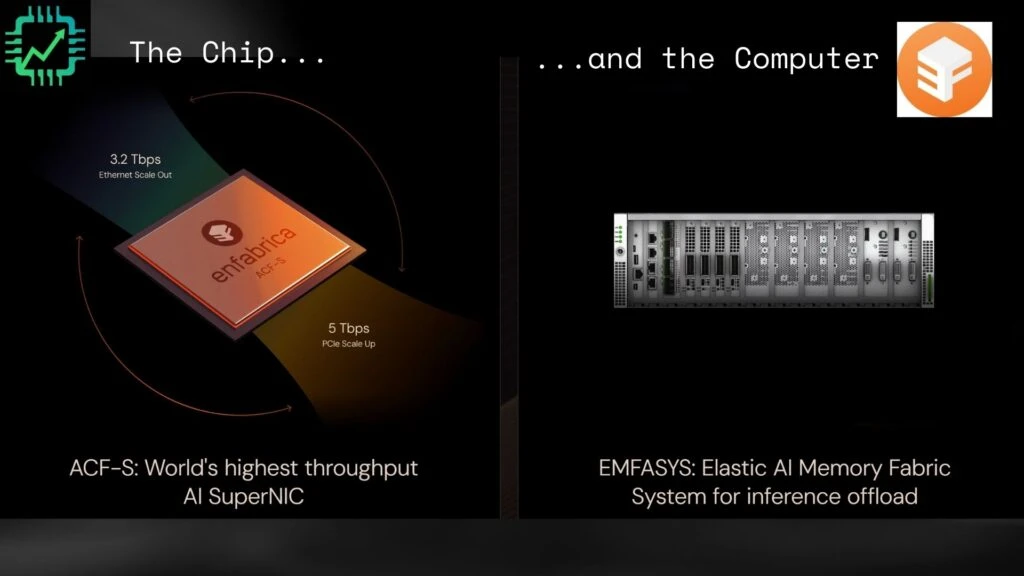

The company’s two primary products are:

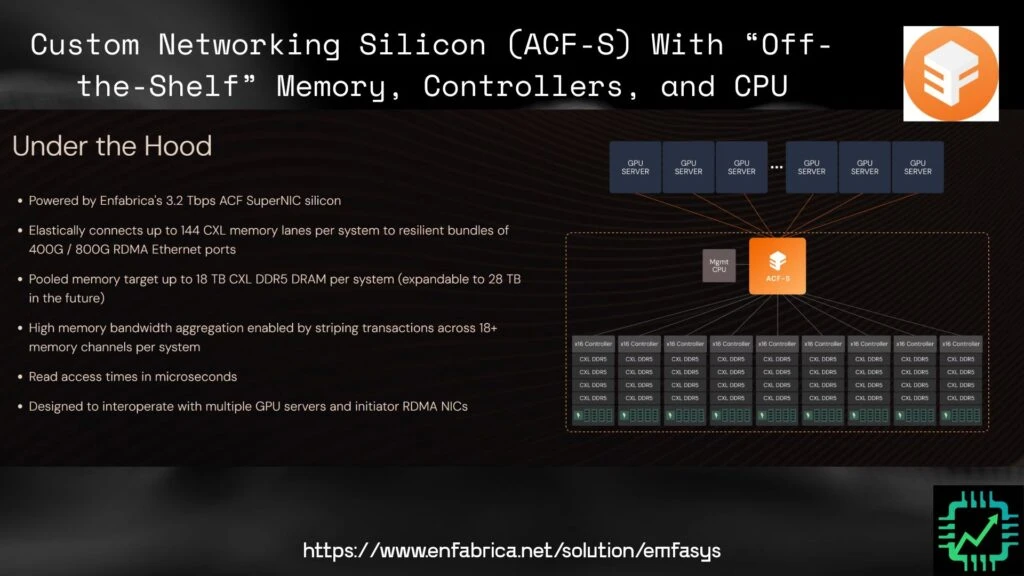

- The ACF-S superNIC (NIC = network interface controller), announced November 2024, which connects GPUs together in a data center and enables their “one big chip” functionality. https://www.enfabrica.net/solution/acf-s https://blogs.nvidia.com/blog/what-is-a-supernic/

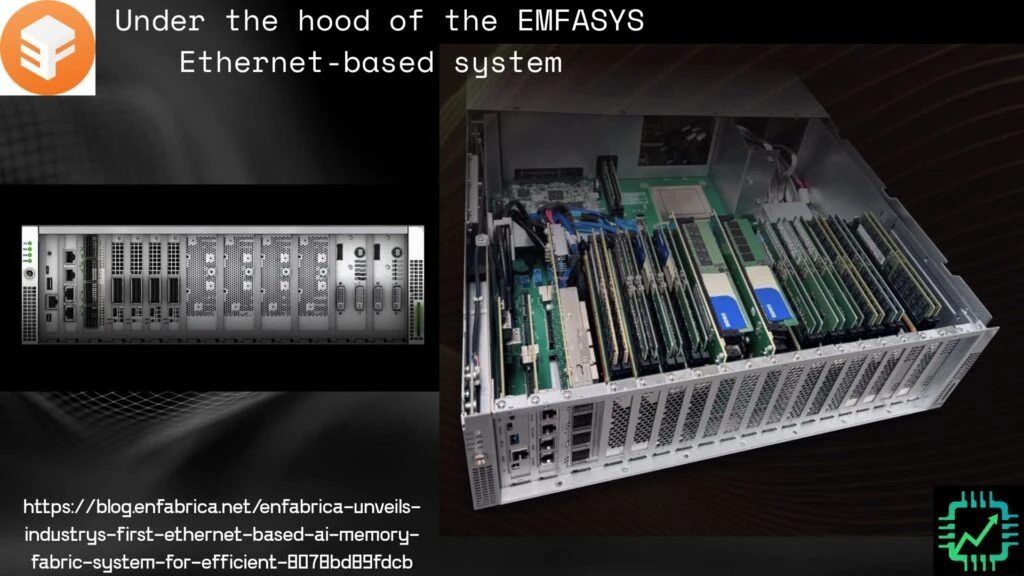

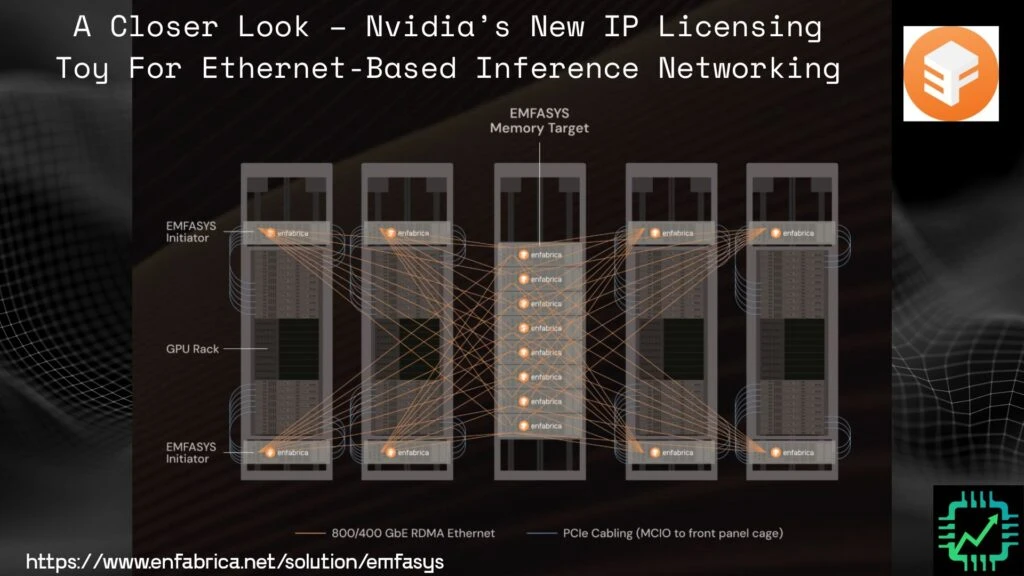

- EMFASYS (Elastic AI Memory Fabric System, announced July 2025), a network server built on the ACF-S superNIC that interconnects GPU servers with data center memory.

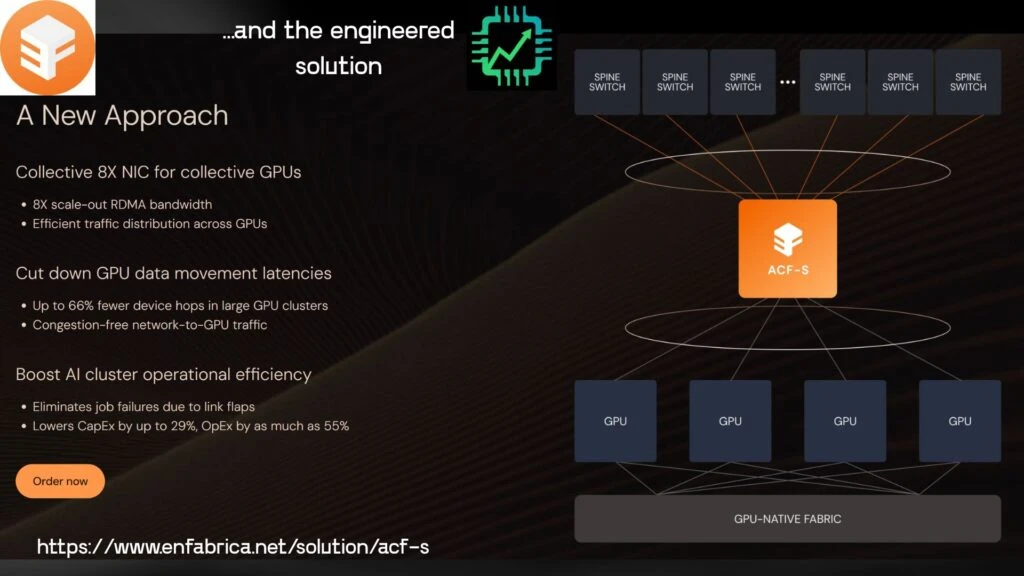

The chip itself is impressive, improving scale-out (distributing workloads across servers) and scale-up (increasing the resource capacity of a server) of Nvidia’s own superNICs and related networking. The ACF-S does this by combining functionality (NIC, PCIe, CXL) onto a single chip.

Enfabrica’s network system is based on open Ethernet standards, naturally, given that Ethernet is the preference as AI shifts from training (GPUs connected using InfiniBand, a networking technology Nvidia came to dominate through its Mellanox acquisition) toward inference (using Ethernet). We discussed this at length a year ago in a two-part series on Nvidia and Arista Networks (ANET): https://youtu.be/OuMuLBVcb84 https://youtu.be/gyfRB8E0p6o

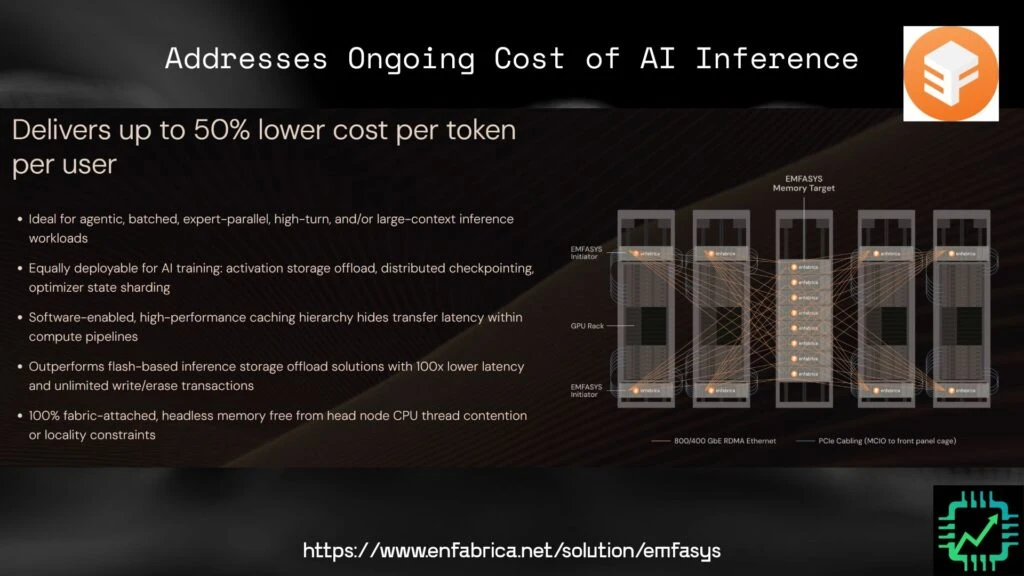

As these AI inference workloads (the AI software being put to use by users) get bigger and more complex, memory is becoming a bottleneck. The HBM (high-bandwidth memory) co-packaged alongside the GPUs isn’t enough. That’s where Enfabrica’s (and now Nvidia’s) EMFASYS comes in, letting GPU servers cache data in an ultra-efficient memory system. That frees up computing power, memory capacity, and bandwidth, all of which lowers the cost of delivering output to user queries.
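To put rough numbers on the memory bottleneck: a transformer’s KV cache stores one key vector and one value vector per layer, per KV attention head, per token, so it grows linearly with conversation length and with the number of concurrent users. The back-of-the-envelope sketch below assumes a model shape of 80 layers and 8 KV heads of dimension 128 with 16-bit values (similar to published 70B-parameter open models); the shape and context lengths are illustrative assumptions, not figures from Enfabrica or Nvidia.

```python
GIB = 1024 ** 3

def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_value: int) -> int:
    # Factor of 2: one key tensor and one value tensor per layer.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)

# Assumed model shape (hypothetical, 70B-class with grouped-query attention).
LAYERS, KV_HEADS, HEAD_DIM, FP16_BYTES = 80, 8, 128, 2

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 1, 1, FP16_BYTES)
one_long_chat = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 128 * 1024, 1, FP16_BYTES)
eight_chats = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 128 * 1024, 8, FP16_BYTES)

print(f"per token: {per_token / 1024:.0f} KiB")                  # per token: 320 KiB
print(f"one 128K-token chat: {one_long_chat / GIB:.0f} GiB")     # one 128K-token chat: 40 GiB
print(f"eight such chats: {eight_chats / GIB:.0f} GiB")          # eight such chats: 320 GiB
```

Under these assumptions, a single long conversation needs tens of gigabytes of cache on top of the model weights, which is why spilling some of it to a cheaper memory tier is attractive.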

Why it works

A few definitions first:

- Elastic = in computing, a system’s ability to dynamically adjust compute and storage resources based on demand; here, a data center’s compute and storage resources.

- Bandwidth = the rate at which data can be moved from one part of the system to another, measured in units of data per second; to use a crude analogy, like the number of lanes on a highway and the speed limit for the highway.

- Capacity = the maximum amount of data that can be moved, processed, or stored in memory in a system.
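The two definitions interact simply: capacity decides whether data fits at all, and bandwidth decides how long it takes to move. Here is a minimal sketch with hypothetical link speeds and memory sizes; none of these numbers describe EMFASYS or any specific product.

```python
GB = 10 ** 9  # decimal gigabyte, as link speeds are usually quoted

def transfer_seconds(num_bytes: int, bandwidth_bytes_per_s: float) -> float:
    """Bandwidth: time to move num_bytes across a link."""
    return num_bytes / bandwidth_bytes_per_s

def fits(num_bytes: int, capacity_bytes: int) -> bool:
    """Capacity: whether num_bytes can be held at all."""
    return num_bytes <= capacity_bytes

payload = 40 * GB  # hypothetical block of cached data

# Hypothetical "highways": same cargo, different lanes and speed limits.
for label, bw in [("narrow link (50 GB/s)", 50 * GB), ("wide link (400 GB/s)", 400 * GB)]:
    print(f"{label}: {transfer_seconds(payload, bw):.2f} s")

# Hypothetical memory pools: a small fast tier and a large slow tier.
print("fits in 32 GB tier:", fits(payload, 32 * GB))    # False
print("fits in 512 GB tier:", fits(payload, 512 * GB))  # True
```

The narrow link takes 0.80 s and the wide one 0.10 s for the same 40 GB, while the small tier cannot hold the payload regardless of how fast the link is.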

To continue with the same crude analogy as above, think of Enfabrica’s EMFASYS as spare lanes that can be opened up to allow for more cars during times of peak data center use. And in off-peak usage, the system adjusts to maximize the power of the GPU servers.

EMFASYS pulls it off by offloading cached data that might be needed later from the HBM onto cheaper DRAM chips, addressing the KV (key-value) caching bottleneck (https://huggingface.co/blog/not-lain/kv-caching). The ACF-S superNIC acts as the controller of the lanes, so any GPU server can access that cached data whenever it needs it. And as with any good hardware, Enfabrica has developed software to help potential customers get the most out of an EMFASYS deployment, based on their unique needs.
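The general offloading idea can be illustrated with a toy two-tier cache: a small fast tier standing in for HBM, backed by a larger, cheaper tier standing in for pooled DRAM. Least-recently-used entries are demoted to the slow tier instead of being thrown away, and a later request promotes them back rather than forcing a recompute. This is a generic LRU demotion scheme chosen for illustration; the class and its names are hypothetical and do not represent Enfabrica’s or Nvidia’s actual EMFASYS software or API.

```python
from collections import OrderedDict

class TieredKVCache:
    """Hypothetical toy: fast tier (think HBM) spills LRU entries to a slow tier (think pooled DRAM)."""

    def __init__(self, fast_capacity: int, slow_capacity: int):
        self.fast: OrderedDict = OrderedDict()  # ordered oldest -> most recently used
        self.slow: OrderedDict = OrderedDict()
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity
        self.stats = {"fast_hits": 0, "slow_hits": 0, "misses": 0}

    def put(self, key, value) -> None:
        self.slow.pop(key, None)   # an entry lives in only one tier at a time
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._evict()

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            self.stats["fast_hits"] += 1
            return self.fast[key]
        if key in self.slow:
            # Cheaper than recomputing: fetch from the slow tier and promote.
            value = self.slow.pop(key)
            self.stats["slow_hits"] += 1
            self.put(key, value)
            return value
        self.stats["misses"] += 1  # caller would have to recompute the cache
        return None

    def _evict(self) -> None:
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # least recently used
            self.slow[old_key] = old_value                      # demote, don't discard
        while len(self.slow) > self.slow_capacity:
            self.slow.popitem(last=False)  # only now is data actually dropped

cache = TieredKVCache(fast_capacity=2, slow_capacity=4)
for chat in ["chat-A", "chat-B", "chat-C"]:
    cache.put(chat, f"kv-blocks-for-{chat}")
print(list(cache.fast), list(cache.slow))  # ['chat-B', 'chat-C'] ['chat-A']
print(cache.get("chat-A"))                 # kv-blocks-for-chat-A (served from slow tier)
print(cache.stats)                         # {'fast_hits': 0, 'slow_hits': 1, 'misses': 0}
```

In the real system, the slow tier would be a memory pool shared by many GPU servers over the network; here a single in-process object stands in for it.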

Not a Ponzi scheme — the non-technical investor’s takeaway

Nvidia has big customers, and pioneering customers, with complex needs. In an effort to serve them all before a competitor does, Nvidia is deploying its ample balance sheet resources to invest in productive business activity. Sometimes that means investing in existing infrastructure development partners (Intel), signing deployment agreements (Oracle), or acqui-hiring promising new engineering work (Enfabrica). Other times, Nvidia wants to invest in the very large end-markets its infrastructure is enabling (OpenAI).

Whatever the case, Nvidia is making moves it thinks will keep it at the center of the growing “AI universe.” And if you’re an Nvidia shareholder, that’s what you should rightfully expect from Nvidia.
