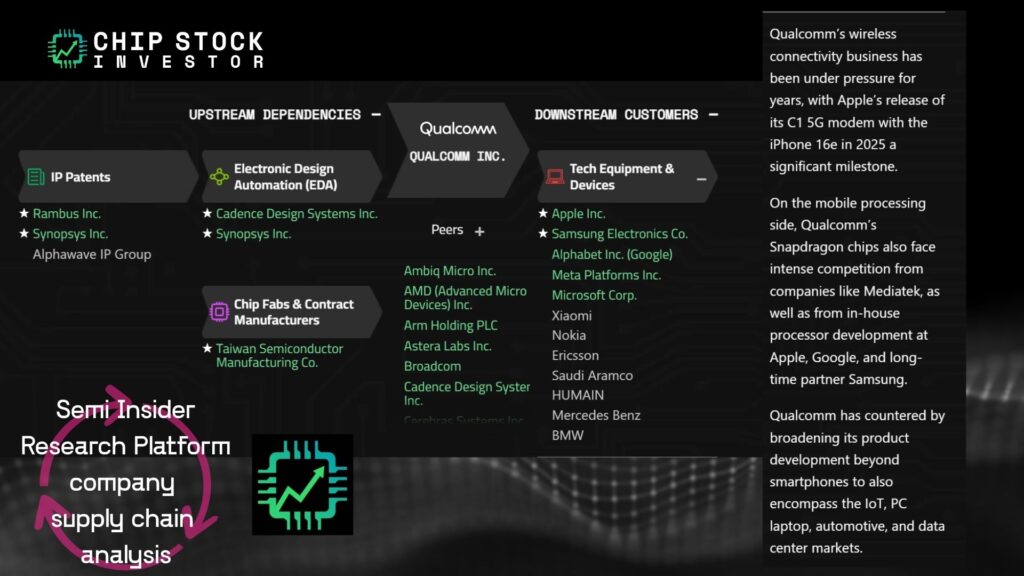

Over on Semi Insider a few months ago, we discussed Qualcomm’s (QCOM) announced acquisition of Alphawave IP Group and why it was the biggest indicator yet that Qualcomm would enter the AI data center market.

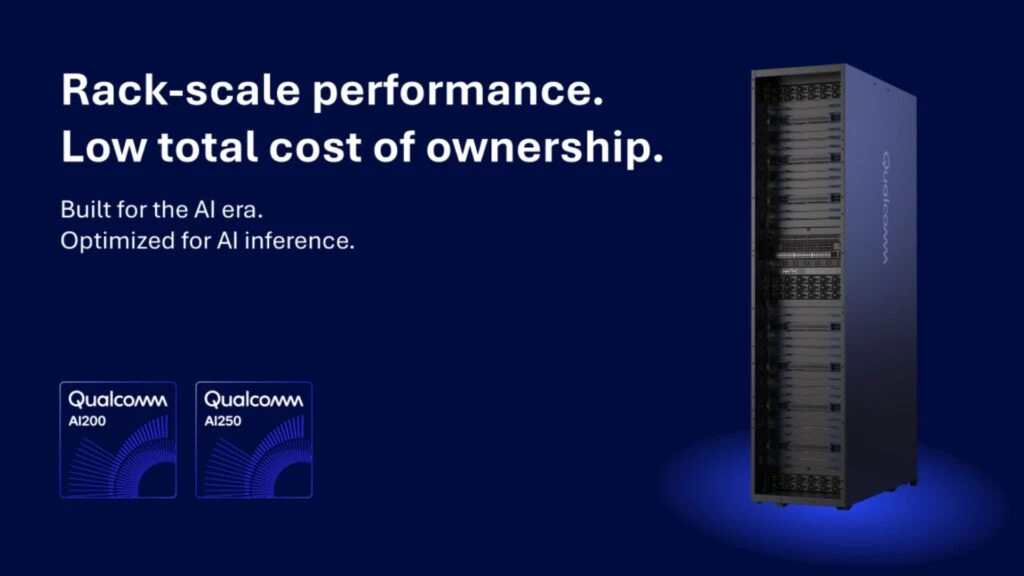

So when the new AI200 and AI250 products were revealed on October 27, 2025, the only surprise was how quickly Qualcomm had positioned itself for the AI inference phase of this data center infrastructure buildout cycle. https://www.qualcomm.com/news/releases/2025/10/qualcomm-unveils-ai200-and-ai250-redefining-rack-scale-data-cent

Still, the AI200 won’t debut until 2026, and the AI250 won’t arrive until 2027, although Qualcomm teased that the latter will include “an innovative memory architecture based on near-memory computing, providing a generational leap in efficiency and performance for AI inference workloads…” Is it too late for QCOM to make a serious splash in this market? And what kind of expectations should investors have?

Qualcomm’s angle, and what is “near-memory compute”?

AI inference (running a model after it has been trained, whether as a chatbot or embedded into software) is the name of the game right now. Nvidia owns the vast majority of the AI training market, and now the race is on to pick up share of inference.

Deploying AI is where economics will play a more visible role than in the markets Nvidia has dominated, especially since 2022: AI training, and the hyperscalers’ back-end acceleration of their own internal workloads. AI startups and existing software companies alike will need a lot more computing power to enable new AI features, yes. But they will also need to control costs so that incremental revenue can help them scale to profitability.

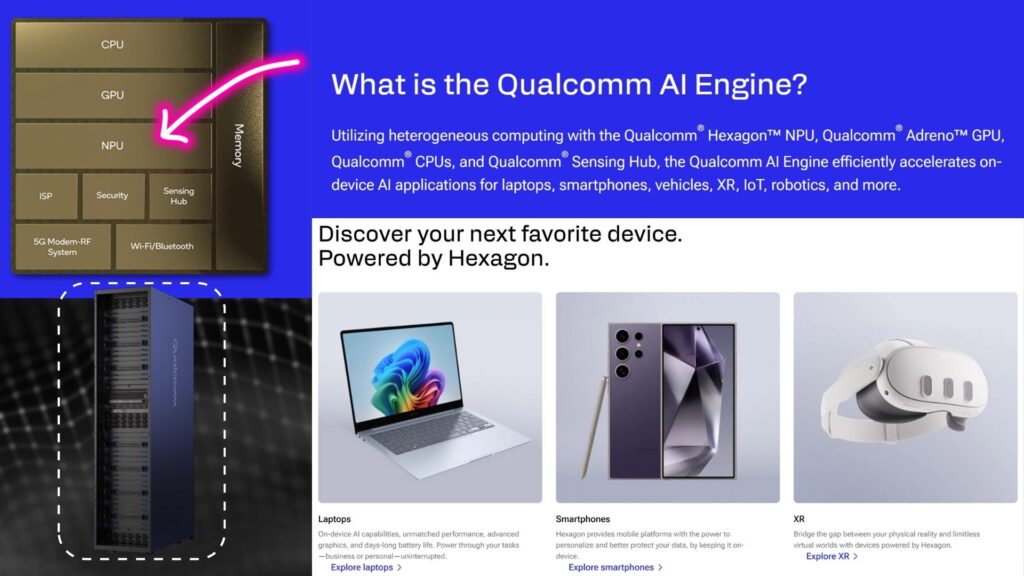

Qualcomm thinks its historically mobile-centric chip designs can offer just that: power-efficient AI compute. Its press release and accompanying visuals make clear that inference is the market it’s going after. https://www.qualcomm.com/news/releases/2025/10/qualcomm-unveils-ai200-and-ai250-redefining-rack-scale-data-cent

When the AI200 begins deploying next year, HUMAIN, the AI venture of Saudi Arabia’s Public Investment Fund, appears set to be a first customer. Qualcomm has been doing a lot of R&D work in Saudi Arabia, having also announced industrial IoT and edge AI initiatives with Saudi Aramco in September 2024 and again in May 2025. https://www.qualcomm.com/news/releases/2025/10/humain-and-qualcomm-to-deploy-ai-infrastructure-in-saudi-arabia- https://www.qualcomm.com/news/releases/2024/09/qualcomm-and-aramco-lead-industrial-innovation-with-transformati https://www.qualcomm.com/news/releases/2025/05/qualcomm-and-aramco-digital-to-drive-industry-transformation-thr

But AI inference is getting crowded. Qualcomm’s competitors include, but are not limited to:

- Nvidia, naturally

- AMD, with building momentum behind its MI450 rack-scale accelerators, which start deploying with OpenAI and Oracle in 2026

- Broadcom, Marvell, and smaller ASIC XPU designers like Alchip, working with the hyperscalers on in-house AI silicon

- Intel, with its custom AI systems partnership with Nvidia (no products announced yet)

- Numerous chip design startups vying for attention, like Cerebras, Groq, and Tenstorrent, to name but a few

To stand out from the crowd, Qualcomm is of course leaning into its history as a power-efficient mobile chip designer. Additionally, the press release teased that “near-memory computing” architecture. What is that?

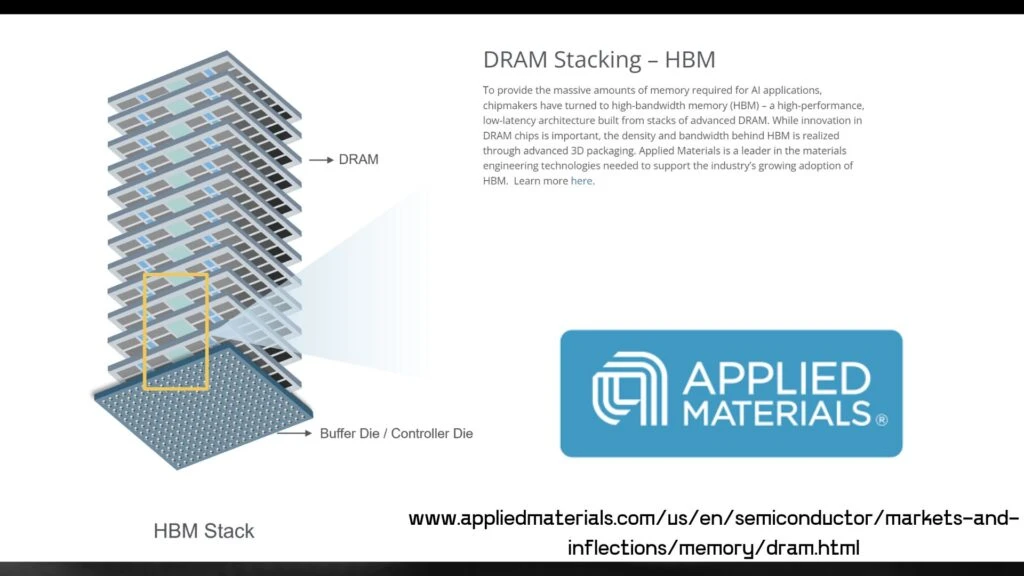

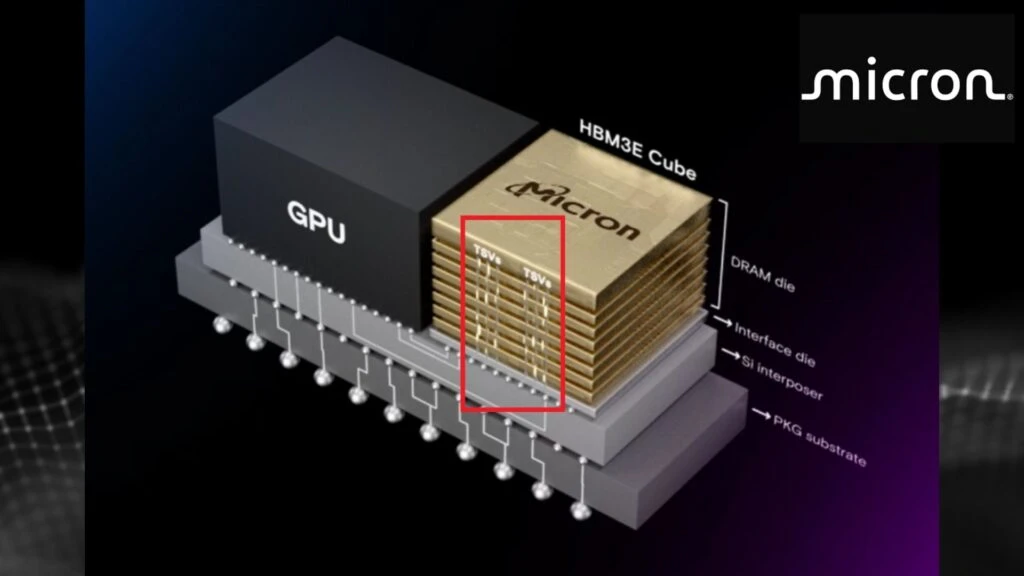

Near-memory computing is a term that has been around the semiconductor industry for many years. It has also become a familiar technology to investors, especially in the form of high-bandwidth memory (HBM): 3D-stacked cubes of DRAM chips co-packaged next to the GPU in data center accelerator systems. Placing the GPU and HBM side by side is a type of 2.5D packaging, in which different chips sit on a common substrate (an interposer) to ease the memory bottleneck in massive computing workloads like AI.
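To see why memory bandwidth, rather than raw compute, is often the bottleneck in inference, here is a rough back-of-envelope sketch in Python. It assumes a simplified model: when a chatbot generates text one token at a time for a single user, every new token requires reading all of the model’s weights from memory once, so memory bandwidth caps the speed. The function name and the model size and bandwidth figures are hypothetical round numbers for illustration, not specs for any Qualcomm, Nvidia, or other product.

```python
def max_decode_tokens_per_second(
    num_params: float, bytes_per_param: float, bandwidth_bytes_per_s: float
) -> float:
    """Rough upper bound on single-user text generation speed.

    Simplifying assumption: each new token streams every model weight from
    memory once (batch size 1), ignoring KV-cache traffic, on-chip caching,
    and compute limits.
    """
    bytes_read_per_token = num_params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_read_per_token


# Hypothetical inputs: a 70-billion-parameter model stored at 1 byte per
# weight (8-bit), on an accelerator with 3 TB/s of memory bandwidth.
rate = max_decode_tokens_per_second(70e9, 1.0, 3e12)
print(f"{rate:.1f} tokens/s")  # 42.9 tokens/s

# Doubling memory bandwidth doubles this ceiling; adding compute alone does not.
print(f"{max_decode_tokens_per_second(70e9, 1.0, 6e12):.1f} tokens/s")  # 85.7 tokens/s
```

In this simplified picture, anything that raises effective bandwidth between memory and logic raises the ceiling, which is the intuition behind HBM and, presumably, behind near-memory designs more generally.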

What could Qualcomm do differently to innovate? Perhaps the near-memory compute architecture it’s referencing for the AI250 in 2027 is a 3D stack of logic chips placed directly atop the memory and interconnected with copper TSVs (through-silicon vias), the same process used to interconnect the stacked DRAM dies that make up HBM. The industry has a long roadmap of future innovation in logic architecture ahead of it. Whatever Qualcomm has planned, we expect a grand reveal event is forthcoming.

What’s Alphawave got to do with it?

After a round of rumors and negotiations, Qualcomm officially announced it was acquiring Alphawave IP in June 2025, and it made clear that the data center AI inference market was the driving force behind the offer. Makes sense. Outside of processors (specifically its NPUs, or neural processing units), Qualcomm’s IP portfolio sits on the wireless, mobile network side of the market, not wired infrastructure. It will need wired tech to build networks of connected NPU accelerators for data center inference.

Alphawave helps solve Qualcomm’s problem. Alphawave IP spans HBM physical layers and controllers, UCIe die-to-die interconnects, PCIe networking for scale-up (adding more compute power per rack), Ethernet networking for scale-out (connecting multiple racks), and more. Qualcomm alluded to all of the above in the AI200 and AI250 press release, hinting that it’s already been working with Alphawave on this project. https://awavesemi.com/silicon-ip/

Assuming the acquisition goes through, owning the IP for the wired infrastructure itself could help Qualcomm control future costs. Just as important, it would give Qualcomm control over the technology roadmap as it begins to deploy AI200s and AI250s, learns from customer deployments, adjusts, and iterates. It’s the same virtuous cycle of improvement Qualcomm has run in mobile computing and mobile networking for decades, and now it has a foot in the door in wired technology too.

The Alphawave proposal builds on what has become Qualcomm’s new playbook as it battles intense competition in its legacy smartphone business: Acquire a small company in a target market, merge it with Qualcomm’s existing tech, and start rolling out new products. It’s how Qualcomm entered the laptop market (acquiring Arm-based CPU designer Nuvia in 2021), and how it boosted growth in its now quite substantial Automotive segment (acquiring Arriver in 2022).

What’s in it for investors?

AI inference, like AI training, is a new infrastructure market. Estimates from tech researcher Gartner point to upwards of $500 billion in spending on the AI data center buildout this year alone, with cumulative global spending on the AI race reaching trillions of dollars by the end of the decade.

Suffice it to say, Qualcomm picking up even a small slice of this expanding pie would be significant in its efforts to diversify away from a smartphone-centric business model. Qualcomm’s trailing-12-month revenue totals $43 billion, putting it close to, and on track to possibly match, its all-time-high sales of $44 billion reached in 2022.

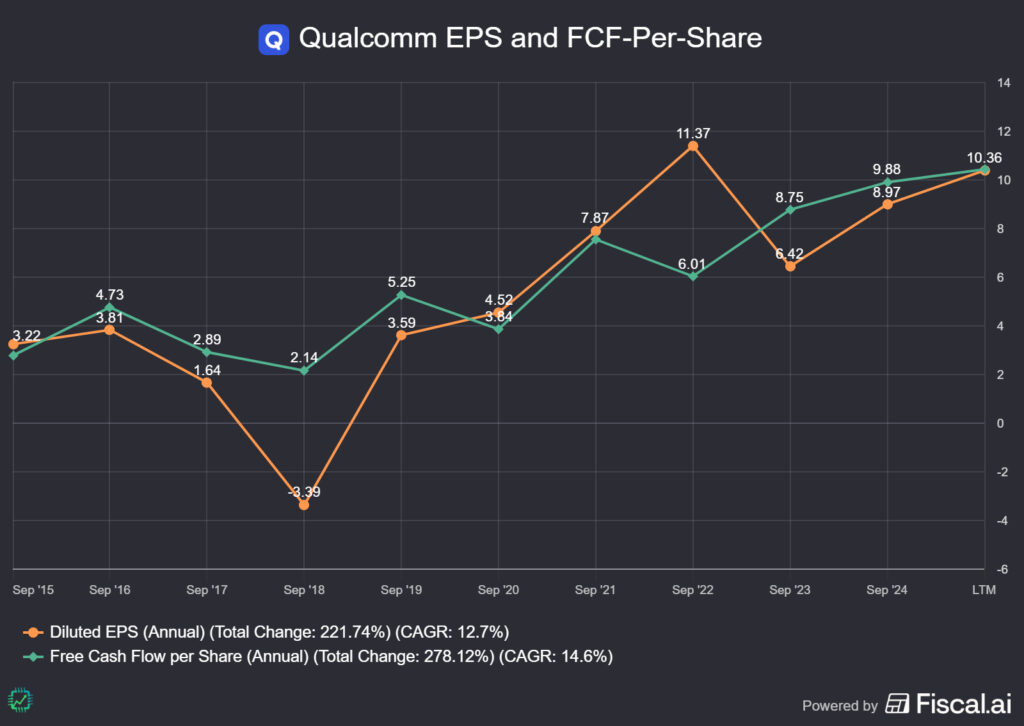

Of greater import, though, is Qualcomm’s focus on per-share profit growth. Despite plenty of turbulence (a pandemic and the subsequent bear market, the wind-down of its relationship with Apple, multiple new-market product R&D cycles, and poor investor sentiment), Qualcomm has actually done a decent job growing its bottom line for shareholders.

No, it isn’t the fastest-growing chip stock, and that’s not likely to change. But if you need a company with low- to mid-teens profit growth and a healthy track record of delivering shareholder returns via rising dividends and share buybacks, Qualcomm might still be worth a look. And if the AI200 and AI250 do start adding incremental revenue and profit next year, perhaps a bit of per-share profit acceleration is in store too.
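For a sense of what steady “low- to mid-teens” growth compounds to, here is a quick Python sketch of doubling time. It assumes, for illustration only, that the phrase means a constant 12% to 15% annual increase in per-share profit; the rates are hypothetical inputs, real growth is never that smooth, and none of this is a forecast.

```python
import math


def years_to_double(annual_growth: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log(1 + annual_growth)


# Hypothetical constant growth rates spanning "low- to mid-teens".
for rate in (0.12, 0.13, 0.14, 0.15):
    print(f"{rate:.0%} a year doubles in ~{years_to_double(rate):.1f} years")
# 12% a year doubles in ~6.1 years
# 13% a year doubles in ~5.7 years
# 14% a year doubles in ~5.3 years
# 15% a year doubles in ~5.0 years
```

Under those assumptions, per-share profit would roughly double every five to six years, with dividends on top of that.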

We’ll circle back to this topic following Qualcomm’s Q4 fiscal 2025 update in November.

See you over on Semi Insider for more of the story, and all of our research!